| Item name |

Required/Optional |

Use of Variables |

Description |

Supplement |

| Destination |

Required |

Not Available |

Select a global resource.

- [Add...]:

Add a new global resource.

- [Edit...]:

Global resource settings can be edited in [Edit Resource list].

|

|

| Table Name |

Required |

Not Available |

Select the name of a table in Salesforce. |

|

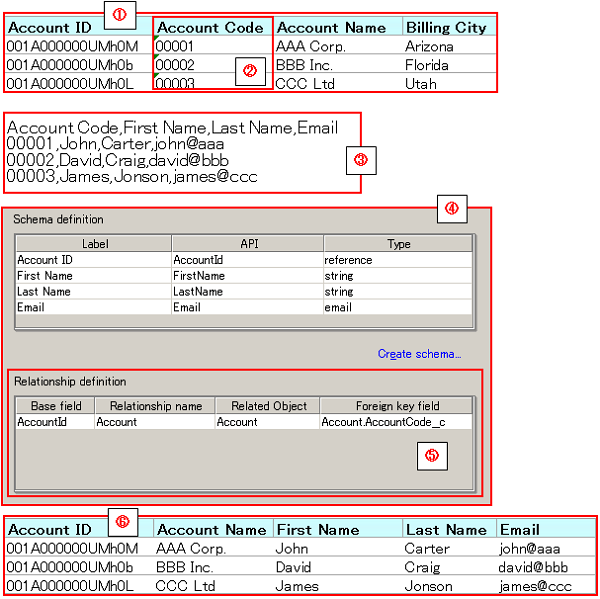

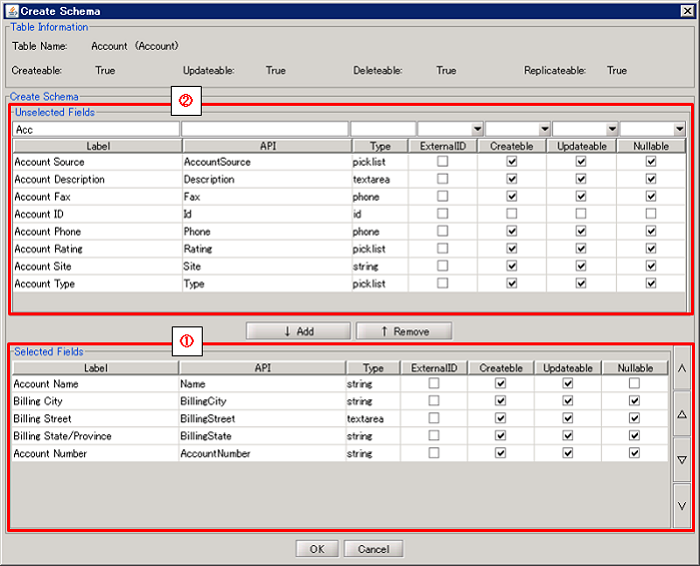

| Schema definition |

Required |

- |

Specify the items for the header row of the CSV data registered in each batch. |

Limiting the schema definition to only the items that need to be written to Salesforce (deleting unnecessary items) improves processing performance. Items whose data type is "base 64" cannot be handled; selecting them causes an error.

|

| Schema definition/Label |

Required |

Not Available |

Display the label name of the column of the table specified in [Table Name]. |

|

| Schema definition/API |

Required |

Not Available |

Display the API name of the column of the table specified in [Table Name]. |

|

| Schema definition/Type |

Required |

Not Available |

Display the data type of the column of the table specified in [Table Name]. |

|

| Relationship definition |

Optional |

- |

If relationship items exist in the Schema Definition, set the items to be updated by external key. |

- By selecting the external key item of the related object, data can be passed through the relationship.

For details, please refer to Relationship Definition.

|

| Relationship definition/Base field |

Required |

Not Available |

Display the API name of the relationship item in the table specified in [Table Name]. |

|

| Relationship definition/Relationship name |

Required |

Not Available |

Display the relationship name of the relationship item in the table specified in [Table Name]. |

|

| Relationship definition/Related Object |

Optional |

Not Available |

Select the API name of the related object for the relationship item in the table specified in [Table Name]. |

|

| Relationship definition/Foreign key field |

Optional |

Not Available |

Select the external key item of the related object for the relationship item in the table specified in [Table Name]. |

- If omitted, the ID of the relevant record of the relation counterpart object will be passed.

|
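The relationship definition can be illustrated with a short Python sketch. It assumes the adapter follows the Salesforce Bulk API CSV convention of writing a relationship column in the header row as `RelationshipName.ExternalKeyField`, and that the base field carries the related record's ID when [Foreign key field] is omitted. The helper names and the field `Account_Ext_Id__c` are hypothetical; this is not the adapter's implementation.

```python
from typing import Dict, List, Optional, Tuple


def relationship_header(base_field: str, relationship_name: str,
                        foreign_key_field: Optional[str]) -> str:
    """Return the CSV header column for one relationship definition row.

    Assumption: uses the Bulk API convention RelationshipName.ExternalKeyField.
    Without a foreign key field, the base field (e.g. AccountId) is kept and
    its value is expected to be the related record's Salesforce ID.
    """
    if foreign_key_field:
        return f"{relationship_name}.{foreign_key_field}"
    return base_field


def build_header(schema: List[str],
                 relationships: Dict[str, Tuple[str, Optional[str]]]) -> List[str]:
    """Map each schema item to its header column, applying relationship rows."""
    return [
        relationship_header(field, *relationships[field])
        if field in relationships else field
        for field in schema
    ]


# Hypothetical Contact load: AccountId is resolved through an external key.
header = build_header(["LastName", "Email", "AccountId"],
                      {"AccountId": ("Account", "Account_Ext_Id__c")})
print(",".join(header))  # LastName,Email,Account.Account_Ext_Id__c
```

Leaving the foreign key empty keeps the plain base field, which matches the supplement note that the related record's ID is passed in that case.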

| Item name |

Required/Optional |

Use of Variables |

Description |

Supplement |

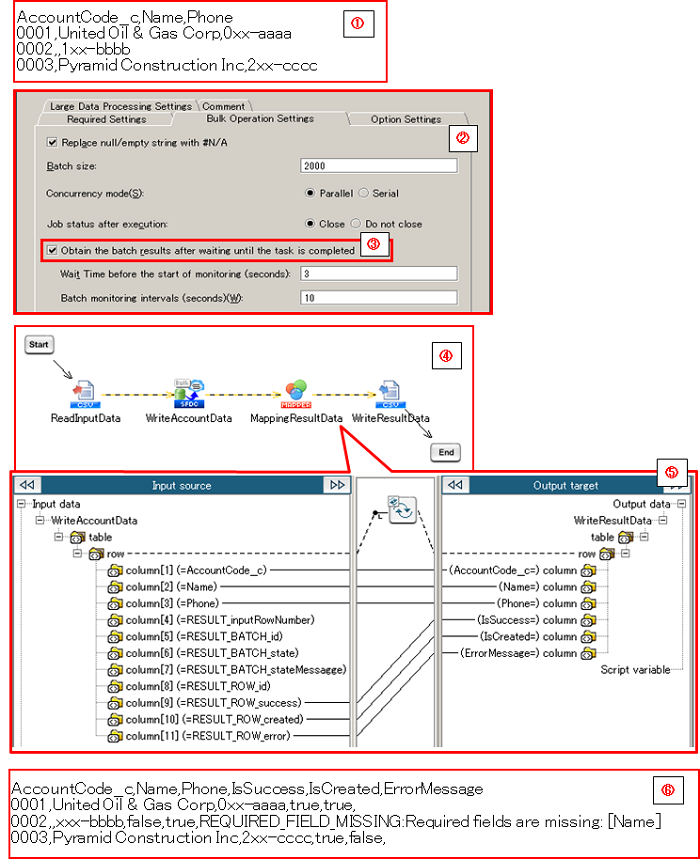

| Replace null/empty string with #N/A |

Required |

Not Available |

If the input data is null or an empty string, select whether to replace it with the string "#N/A".

- [Checked]:(default)

Replace the string.

- [Not Checked]:

Do not replace the string.

|

Due to the API specification, the value must be replaced with "#N/A" to update data to null.

|

| Batch size |

Required |

Available |

Input the upper limit of the number of records that can be registered to one batch. |

- The default value is "2000."

- You can set the value between 1 and 10000. An error message will be shown if a value outside this range is set.

For example, with this adapter, writing 100,000 records with a batch size of 2,000 creates 50 batches.

However, if a batch would exceed the API limit of 10 MB, it is created with fewer records than the specified batch size.

|

| Concurrency mode |

Required |

Not Available |

Select the concurrency mode of the job.

- [Parallel]:(default)

The job is created with the parallel processing mode.

- [Serial]:

The job is created with the sequential processing mode.

|

In parallel mode, database contention can occur. If contention is severe, loading can fail.

In serial mode, the batches are reliably processed one after another. However, the processing time can increase significantly.

|

| Job status after execution |

Required |

Not Available |

Select whether to close the created job after execution.

- [Close]:(default)

The job will be closed after execution.

- [Do not close]:

The job will not be closed after execution. It will remain open.

|

|

| Obtain the batch results after waiting until the task is completed |

Required |

Not Available |

Select whether to monitor the created batches until they finish and obtain the batch results.

- [Checked]:(default)

Obtain batch results. The obtained batch results can be passed to subsequent processing through the output schema.

- [Not Checked]:

Do not obtain batch results.

|

- If [Checked] is selected, the items specified in the schema definition, together with the result information items, can be passed to subsequent processing as Table Model type data.

Please refer to "Schema" for the information obtained. Please refer to "Usage Examples of Get Results" for examples.

|

| Wait Time before the start of monitoring (sec) |

Optional |

Available |

Input the time to wait before batch state monitoring starts, in seconds.

|

- The default value is "3".

- Valid only when [Obtain the batch results after waiting until the task is completed] is checked.

- A value from 3 to 10800 can be specified. If a value outside this range is specified, an error occurs.

|

| Batch monitoring intervals (sec) |

Optional |

Available |

Input the interval, in seconds, at which to check whether the batches have completed and their results can be obtained. |

- The default value is "10".

- Valid only when [Obtain the batch results after waiting until the task is completed] is checked.

- A value from 10 to 600 can be specified. If a value outside this range is specified, an error occurs.

|
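The [Replace null/empty string with #N/A] and [Batch size] rules can be sketched in Python as follows. This is an illustration under stated assumptions, not the adapter's implementation: the function names are hypothetical, each batch is assumed to repeat the CSV header row, and the 10 MB limit is interpreted as 10 * 1024 * 1024 bytes of UTF-8 text.

```python
import csv
import io
from typing import Iterable, List, Optional, Sequence

# Assumption: "10 MB" read as 10 * 1024 * 1024 bytes of UTF-8 CSV text.
MAX_BATCH_BYTES = 10 * 1024 * 1024


def to_csv_value(value: Optional[str], replace_with_na: bool = True) -> str:
    """Apply [Replace null/empty string with #N/A] to a single value."""
    if value is None or value == "":
        return "#N/A" if replace_with_na else ""
    return value


def csv_line(values: Sequence[str]) -> str:
    """Encode one row as a CSV line, quoting values when needed."""
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerow(values)
    return buf.getvalue()


def split_into_batches(header: Sequence[str],
                       rows: Iterable[Sequence[Optional[str]]],
                       batch_size: int = 2000,
                       max_bytes: int = MAX_BATCH_BYTES,
                       replace_with_na: bool = True) -> List[str]:
    """Split rows into CSV batches limited by record count and byte size.

    Every batch repeats the header row. A batch is closed early when adding
    the next row would push it past max_bytes, so it can hold fewer records
    than batch_size. (A single oversized row still gets its own batch here.)
    """
    if not 1 <= batch_size <= 10000:
        raise ValueError("batch size must be between 1 and 10000")
    header_line = csv_line(header)
    header_bytes = len(header_line.encode("utf-8"))
    batches: List[str] = []
    lines: List[str] = []
    size = header_bytes
    for row in rows:
        line = csv_line([to_csv_value(v, replace_with_na) for v in row])
        line_bytes = len(line.encode("utf-8"))
        if lines and (len(lines) >= batch_size or size + line_bytes > max_bytes):
            batches.append(header_line + "".join(lines))
            lines, size = [], header_bytes
        lines.append(line)
        size += line_bytes
    if lines:
        batches.append(header_line + "".join(lines))
    return batches


# 100,000 records with a batch size of 2,000 produce 50 batches.
records = [["Smith", None]] * 100_000
batches = split_into_batches(["LastName", "Email"], records)
print(len(batches))                # 50
print(batches[0].splitlines()[1])  # Smith,#N/A
```

Checking the byte size before appending each row is what lets a batch close with fewer records than [Batch size] when the 10 MB limit would otherwise be exceeded.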

| Item name |

Description |

Supplement |

| Display Table Information... |

You can check the table structure of the object being operated on.

For more information on how to view the table structure, please refer to Display Table Information. |

- Click [Load All Table Information] if you would like to check the table structure of other objects.

|

| Load All Table Information |

Retrieve all available table information.

After running, you can verify the retrieved information from [Table Information]. |

|

| Read schema definition from file... |

Select a file in the file chooser. The field API names on the first line of the file, given as comma-separated values, are read and set as the schema definition. |

- Please specify "UTF-8" encoding for the selected file.

|
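Reading the schema definition from the first line of a CSV file can be sketched in Python as below. The function names are hypothetical, skipping empty header cells is an assumption, and the `utf-8-sig` codec is chosen so that a UTF-8 file with a leading BOM is also accepted; how the adapter itself treats a BOM is not stated here.

```python
import csv
import io
from typing import List, TextIO


def read_schema_definition(stream: TextIO) -> List[str]:
    """Return the field API names listed on the first line of a CSV stream."""
    first_row = next(csv.reader(stream), [])
    # Assumption: empty header cells are ignored rather than treated as items.
    return [name.strip() for name in first_row if name.strip()]


def read_schema_definition_from_file(path: str) -> List[str]:
    # "utf-8-sig" reads plain UTF-8 and also strips a leading BOM if present.
    with open(path, encoding="utf-8-sig", newline="") as f:
        return read_schema_definition(f)


sample = io.StringIO("LastName,FirstName,Email\nSmith,Jo,jo@example.com\n")
print(read_schema_definition(sample))  # ['LastName', 'FirstName', 'Email']
```

Only the first line is consumed; the remaining data rows in the file are not needed to build the schema definition.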

| Element Name |

Column Name(Label/API) |

Description |

Supplement |

| row |

- |

Repeats as many times as the number of records given to the input schema. |

- If [Obtain the batch results after waiting until the task is completed] is not checked, it repeats 0 times.

|

| column |

RESULT_inputRowNumber |

The position (row number) of the record in the data given to the input schema is output. |

|

| RESULT_BATCH_id |

The IDs of the batches that processed the records will be output. |

|

| RESULT_BATCH_state |

The state of the batches that processed the records will be output.

- [Completed]:The process has completed.

- [Failed]:The process was not successful. Please verify the [RESULT_BATCH_stateMessage].

|

- Even when the state is [Completed], the result may differ from record to record.

Please verify "ResultInformation_Record."

|

| RESULT_BATCH_stateMessage |

The state message of the batches that processed the records will be output. |

|

| RESULT_ROW_id |

The record ID is output. |

|

| RESULT_ROW_success |

The record's success flag is output.

- [true]:The process was successful.

- [false]:The process was unsuccessful. Please verify the [RESULT_ROW_error].

|

- In the case where the [RESULT_BATCH_state] is not [Completed], the outcome will be [false].

|

| RESULT_ROW_created |

The record's new creation flag is output.

- [true]:A record was newly created.

- [false]:A record was not newly created.

|

|

| RESULT_ROW_error |

The record's error message is output. If the batch results cannot be obtained, the state message of the batch that processed the record is output. |

|
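The rule that a record succeeds only when its batch is [Completed] and its own success flag is true can be sketched in Python as follows. The counts returned here presumably correspond to the `get_result_success_count` and `get_result_error_count` component variables, but that correspondence is an assumption; the class, function names, batch IDs, and messages are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ResultRow:
    """One output row; attribute names map to the schema columns above."""
    input_row_number: int            # RESULT_inputRowNumber
    batch_id: str                    # RESULT_BATCH_id
    batch_state: str                 # RESULT_BATCH_state ("Completed" / "Failed")
    row_success: bool                # RESULT_ROW_success
    row_error: Optional[str] = None  # RESULT_ROW_error


def is_success(row: ResultRow) -> bool:
    # A record only counts as successful when its batch is Completed,
    # mirroring the note that RESULT_ROW_success is false otherwise.
    return row.batch_state == "Completed" and row.row_success


def count_results(rows: List[ResultRow]) -> Tuple[int, int]:
    """Return (success_count, error_count) for the given result rows."""
    success = sum(1 for r in rows if is_success(r))
    return success, len(rows) - success


def failed_row_numbers(rows: List[ResultRow]) -> List[int]:
    """Input row numbers whose RESULT_ROW_error should be inspected."""
    return [r.input_row_number for r in rows if not is_success(r)]


rows = [
    ResultRow(1, "BATCH-1", "Completed", True),
    ResultRow(2, "BATCH-1", "Completed", False, "(record error message)"),
    ResultRow(3, "BATCH-2", "Failed", False, "(batch state message)"),
]
print(count_results(rows))       # (1, 2)
print(failed_row_numbers(rows))  # [2, 3]
```

Row 2 shows why a [Completed] batch still needs a per-record check: the batch finished, but that individual record was not processed successfully.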

| Component Variable Name |

Description |

Supplement |

| job_id |

The IDs of the jobs created are stored. |

- The default value is null.

|

| read_count |

The number of input records is stored. |

- The default value is null.

|

| created_batch_count |

The number of batches created is stored. |

- The default value is null.

|

| get_result_success_count |

The number of records that were processed successfully is stored. |

- The default value is null.

- If [Obtain the batch results after waiting until the task is completed] is not checked, a value will not be stored.

|

| get_result_error_count |

The number of records that failed to be processed is stored. |

- The default value is null.

- If [Obtain the batch results after waiting until the task is completed] is not checked, a value will not be stored.

|

| server_url |

The endpoint URL after login is stored. |

- The default value is null.

|

| session_id |

The session ID is stored. |

- The default value is null.

|

| message_category |

In the case that an error occurs, the category of the message code corresponding to the error is stored. |

- The default value is null.

|

| message_code |

In the case that an error occurs, the code of the message code corresponding to the error is stored. |

- The default value is null.

|

| message_level |

In the case that an error occurs, the importance of the message code corresponding to the error is stored. |

- The default value is null.

|

| operation_api_exception_code |

If an API error occurs, the ExceptionCode of the error is stored. |

- The default value is null.

- For any error other than an API error, the value is not stored.

The content to be stored may change according to the version of DataSpider Servista.

|

| operation_error_message |

If an error occurs, the error message of the error is stored. |

- The default value is null.

The content to be stored may change according to the version of DataSpider Servista.

|

| operation_error_trace |

If an error occurs, the trace information of the error is stored. |

- The default value is null.

The content to be stored may change according to the version of DataSpider Servista.

|

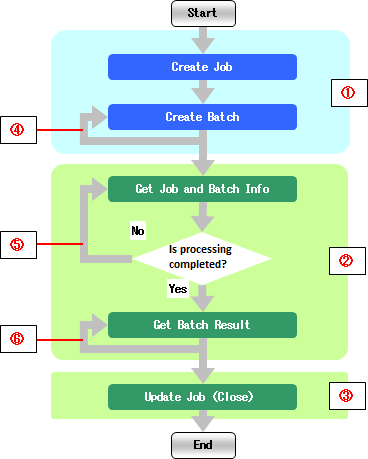

| Number in the Image |

Name |

Description |

Supplement |

| (1) |

Required Processes |

These are mandatory processes that will take place regardless of the property settings. |

- These processes are skipped only when there are 0 input records.

|

| (2) |

Selection of Whether or Not to Execute the Batch Result Obtainment and Job/Batch Information Obtainment |

Whether or not to execute can be selected by the value of the [Obtain the batch results after waiting until the task is completed] in the [Bulk Operation Settings] tab. |

- If the [Obtain the batch results after waiting until the task is completed] is checked, the process will take place.

|

| (3) |

Selection of whether or not to execute the Job Update (Close) |

Whether or not to execute can be selected by the value of the [Job status after execution] in the [Bulk Operation Settings] tab. |

- If "Close" is selected for the [Job status after execution], the process will take place.

|

| (4) |

Repetition of the Batch Creation |

Batch creation repeats according to the number of input records or their data volume. |

|

| (5) |

Repetition of the Job/Batch Result Obtainment |

Until the process is completed, the job/batch information obtainment repeats at the interval set in [Batch monitoring intervals (sec)] on the [Bulk Operation Settings] tab. |

|

| (6) |

Repetition of the Batch Result Obtainment |

Batch results will be obtained repeatedly, the same amount of times as the number of batches created in (4). |

|
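The monitoring loop in steps (2) and (5) can be sketched in Python as below. It is a sketch, not the adapter's code: `get_states` is a hypothetical callback returning `{batch_id: state}`, and `sleep` is injectable so the timing can be checked without waiting. The terminal states are the Salesforce Bulk API batch states `Completed`, `Failed`, and `Not Processed`; the value ranges come from the [Bulk Operation Settings] table.

```python
import time
from typing import Callable, Dict

# Bulk API batch states that do not change any further.
TERMINAL_STATES = {"Completed", "Failed", "Not Processed"}


def validate_monitoring(wait_before: int, interval: int) -> None:
    """Check the ranges documented for the two monitoring properties."""
    if not 3 <= wait_before <= 10800:
        raise ValueError("wait time before monitoring must be 3-10800 seconds")
    if not 10 <= interval <= 600:
        raise ValueError("batch monitoring interval must be 10-600 seconds")


def wait_for_batches(get_states: Callable[[], Dict[str, str]],
                     wait_before: int = 3,
                     interval: int = 10,
                     sleep: Callable[[float], None] = time.sleep) -> Dict[str, str]:
    """Poll batch states until every batch reaches a terminal state."""
    validate_monitoring(wait_before, interval)
    sleep(wait_before)  # [Wait Time before the start of monitoring (sec)]
    while True:
        states = get_states()
        if all(state in TERMINAL_STATES for state in states.values()):
            return states
        sleep(interval)  # [Batch monitoring intervals (sec)]


# Simulated states for one hypothetical batch; sleeps are recorded, not taken.
responses = iter([{"BATCH-1": "Queued"},
                  {"BATCH-1": "InProgress"},
                  {"BATCH-1": "Completed"}])
slept = []
final = wait_for_batches(lambda: next(responses), sleep=slept.append)
print(final, slept)  # {'BATCH-1': 'Completed'} [3, 10, 10]
```

After this loop returns, step (6) would fetch the result of each batch once, as many times as batches were created in step (4).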

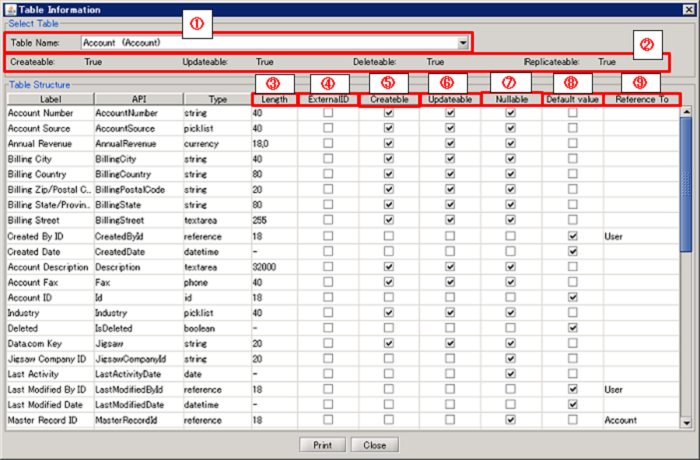

| Number in the Image |

Name |

Description |

Supplement |

| (1) |

Table Name |

Select the table whose structure is to be displayed. |

|

| (2) |

Table Information |

Display the available operations on the selected table. |

|

| (3) |

Length |

Display the number of digits (length) of the item.

|

| (4) |

External ID |

Display whether or not the object item is set as an external ID. |

|

| (5) |

Createable |

Display whether a value can be set for the item when adding data. |

|

| (6) |

Updatable |

Display whether a value can be set for the item when updating data. |

|

| (7) |

Nullable |

Display whether NULL can be set for the item when adding or updating data. |

|

| (8) |

Default value |

Display whether Salesforce automatically sets a default value when adding data. |

|

| (9) |

Reference To |

Display the referenced object name if the item is in a lookup relationship or a master-detail relationship. |

|
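The [Createable] and [Updatable] columns, together with the note that "base 64" items cannot be handled, can be used to trim a schema definition to writable items. The Python sketch below assumes an insert or update operation and uses a hypothetical `FieldInfo` record holding a subset of the dialog's columns; the type string `"base64"` follows the Salesforce describe type name, and `Legacy_Key__c` is a hypothetical field.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class FieldInfo:
    """Subset of the columns shown in the table information dialog."""
    api_name: str
    data_type: str   # e.g. "string", "id", "base64"
    createable: bool
    updateable: bool


def writable_fields(fields: Sequence[FieldInfo], operation: str) -> List[str]:
    """Return the API names that can be written for "insert" or "update".

    base64 items are always excluded because the adapter cannot handle them.
    """
    if operation not in ("insert", "update"):
        raise ValueError("operation must be 'insert' or 'update'")
    result = []
    for f in fields:
        if f.data_type == "base64":
            continue
        allowed = f.createable if operation == "insert" else f.updateable
        if allowed:
            result.append(f.api_name)
    return result


fields = [
    FieldInfo("Id", "id", createable=False, updateable=False),
    FieldInfo("LastName", "string", createable=True, updateable=True),
    FieldInfo("Legacy_Key__c", "string", createable=True, updateable=False),
    FieldInfo("Body", "base64", createable=True, updateable=True),
]
print(writable_fields(fields, "insert"))  # ['LastName', 'Legacy_Key__c']
print(writable_fields(fields, "update"))  # ['LastName']
```

Dropping items that cannot be written also follows the Schema definition supplement, which notes that removing unnecessary items improves processing performance.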

| Exception Name |

Reason |

Resolution |

ResourceNotFoundException

Resource Definition is Not Found. Name:[] |

[Destination] is not specified. |

Specify [Destination]. |

ResourceNotFoundException

Resource Definition is Not Found. Name:[<Global Resource Name>] |

The resource definition selected in [Destination] cannot be found. |

Verify the global resource specified in [Destination].

| java.net.UnknownHostException |

This exception occurs when the PROXY server specified in the global resource cannot be found. |

Verify the condition of the PROXY server. Or verify [Proxy Host] of the global resource specified in the [Destination]. |

java.net.SocketTimeoutException

connect timed out |

A time-out has occurred while connecting to Salesforce. |

Verify the network condition and Salesforce server condition. Or check [Connection timeout(sec)] of the global resource specified in the [Destination]. |

java.net.SocketTimeoutException

Read timed out |

A time-out has occurred while waiting for a response from the server after connecting to Salesforce. |

Verify the network condition and Salesforce server condition. Or check [Timeout(sec)] of the global resource specified in the [Destination]. |

| jp.co.headsol.salesforce.adapter.exception.SalesforceAdapterIllegalArgumentException |

Invalid value is set for the property of SalesforceBulk adapter. |

Check the error message, and verify the settings. |

| com.sforce.soap.partner.fault.LoginFault |

Login to Salesforce has failed. |

Check the ExceptionCode or error message, and refer to the information about this type of error in Salesforce-related documents etc. |

| com.sforce.async.AsyncApiException |

An error has occurred in the batch or job executed in the SalesforceBulk adapter. |

Check the ExceptionCode or error message, and refer to the information about this type of error in Salesforce-related documents etc. |
