Field descriptions

Request acknowledge port No. (port)

This specifies the port number used by the DataMagic service to wait for requests from other hosts.

In DataMagic Server, the default value is 36000. Specify a port number that is not used by the OS or any other products.

For the DataMagic Desktop grade, the Request acknowledge port No. (port) is set to 0. Do not change this value.

Local host kanji code type (knjcode)

This specifies the kanji code type of the system in use.

It is referenced by the DataMagic service (huledd), the command for executing data processing (utled), and the utilities.

If you change the code type for your local host, the kanji code type used in the system log is also changed.

In Windows, the value is fixed to Shift JIS.

|

Kanji code type of the system |

Value set in file |

|---|---|

|

Shift JIS code |

S |

|

EUC code |

E |

|

UTF-8 code |

8 |

If the character code (system locale) in Windows is UTF-8, the following are output in UTF-8:

-

Messages that are output by the command for executing data processing (utled command) and utilities.

-

Contents of error files that are output during data processing.

-

Causes of skipped errors that are output during data processing.

-

Contents of trace log files that are output during data processing.

-

Contents of export files that are output when you execute the command for exporting management information (utledigen command) with "-k" omitted.

Trace output mode (trace_output_mode)

This specifies the trace log output mode.

For details about the log output function, see DataMagic Error Codes and Messages.

|

Trace output mode |

Value set in file |

|---|---|

|

None |

0 |

|

Start, end, error, warning |

1 |

|

Start, end, error, warning |

2 |

|

Start, end, error, warning |

3 |

|

Start, end, error, warning, debug |

9 |

Trace changeover method (trace_switch_mode)

This specifies the trace log changeover method.

|

Trace changeover method |

Value set in file |

|---|---|

|

Do not change over |

0 |

|

Change over after exceeding a specified file size |

1 |

Trace changeover size (trace_switch_size)

This specifies the timing for switching to a new trace log file. The value can be from 10 to 100. The unit is megabytes (MB).

File changeover will be performed when the trace log reaches the specified size.

For details about the log output function, see DataMagic Error Codes and Messages.

No. of trace generations retained (trace_keep_cnt)

This specifies the number of trace log file generations managed. The value can be from 1 to 99.

For details about the log output function, see DataMagic Error Codes and Messages.

Syslog or event log output (syslog_output)

This specifies whether to output the trace log to the event log or the system log (syslog).

|

Syslog or event log output |

Value set in file |

|---|---|

|

Do not output |

0 |

|

Output |

1 |

Trace directory (log_dir)

This specifies the directory to which the trace log file is output.

Use a maximum of 200 bytes of characters.

You cannot use external characters. This item must be specified unless the trace output mode is 0.

For details about the log output function, see DataMagic Error Codes and Messages.

Work file directory (work_dir)

This specifies the directory to which temporary files are output.

Use a maximum of 200 bytes of characters.

In Windows, you cannot use external characters.

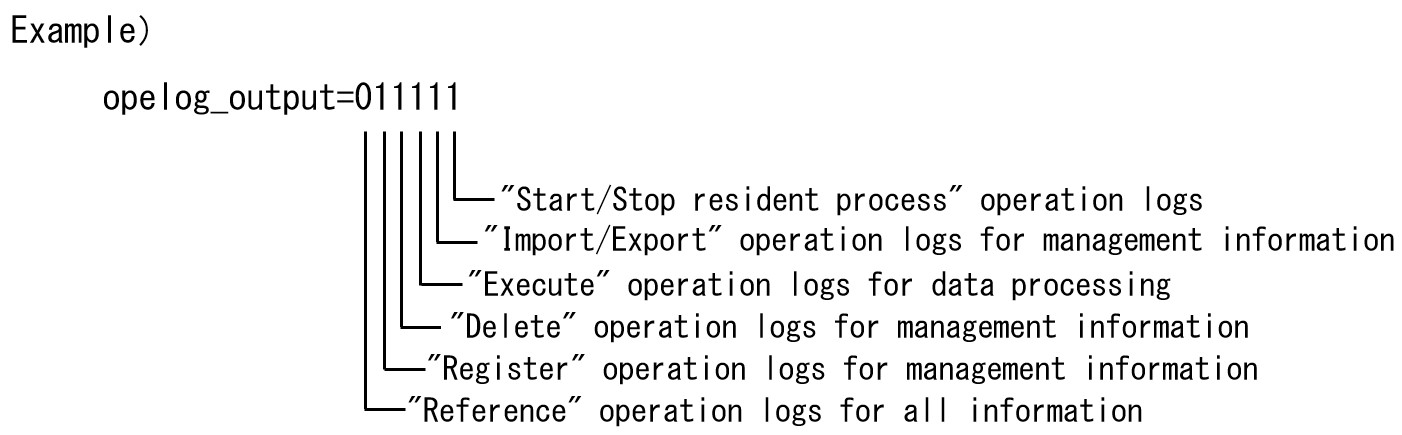

Operation log to output (opelog_output)

This specifies whether to output operation logs to Operation Log List.

You can specify each operation type by using a six digit number made up of "0"s and "1"s.

The digits of the number represent the following operations from left to right respectively:

"Reference" for all information, "Register" or "Delete" for management information, "Execute" for data processing, "Import/Export" for management information, and "Start/Stop resident process".

0: Do not output logs related to the operation

1: Output logs related to the operation

If you specify the above, "Reference" operation logs for all information are not output to Operation Log List, but the following logs are output to Operation Log List:

- "Register" operation logs for management information

- "Delete" operation logs for management information

- "Execute" operation logs for data processing

- "Import/Export" operation logs for management information

- "Start/Stop resident process" operation logs

Generate an error if the user ID and password are the same (sec_userpass_same)

This specifies whether to generate an error if the user ID and password are set to the same value when user information is registered.

|

If the user ID and the password are the same |

Value set in file |

|---|---|

|

Generate an error |

Y |

|

Do not generate an error |

N |

This field is used in the DataMagic Server grade. In the DataMagic Desktop grade, this field is ignored.

Generate an error if the user ID consists only of numerals (0-9) (sec_user_numonly)

This specifies whether to generate an error if the user ID is set by using only numerals (0-9) when user information is registered.

The set values are the same as for Generate an error if the user ID and password are the same. See Table 5.9.

This field is used in the DataMagic Server grade. In the DataMagic Desktop grade, this field is ignored.

Generate an error if the password consists only of numerals (0-9) (sec_pass_numonly)

This specifies whether to generate an error if the password is set by using only numerals (0-9) when user information is registered.

The set values are the same as for Generate an error if the user ID and password are the same. See Table 5.9.

This field is used in the DataMagic Server grade. In the DataMagic Desktop grade, this field is ignored.

Generate an error if the user ID consists only of letters in the English alphabet (lowercase or uppercase) (sec_user_alphaonly)

This specifies whether to generate an error if the user ID is set by using only lowercase or uppercase letters when user information is registered.

The set values are the same as for Generate an error if the user ID and password are the same. See Table 5.9.

This field is used in the DataMagic Server grade. In the DataMagic Desktop grade, this field is ignored.

Generate an error if the password consists only of letters in the English alphabet (lowercase or uppercase) (sec_pass_alphaonly)

This specifies whether to generate an error if the password is set by using only lowercase or uppercase letters when user information is registered.

The set values are the same as for Generate an error if the user ID and password are the same. See Table 5.9.

This field is used in the DataMagic Server grade. In the DataMagic Desktop grade, this field is ignored.

Minimum number of characters in a password (sec_pass_min)

This specifies the minimum number of characters used to set the password when user information is registered. The value can be from 1 to 20.

This field is used in the DataMagic Server grade. In the DataMagic Desktop grade, this field is ignored.

Output data processing log (ed_log_output)

This specifies whether to output the data processing log.

|

Data processing log |

Value set in file |

|---|---|

|

Do not output |

0 |

|

Output |

1 |

Log entries retained (log_del_border)

This specifies the number of data processing log entries to retain. The value can be from 0 to 999999999. If 0 is specified, log entries will not be deleted.

Output preference for error file log (ed_err_output_level)

This specifies the preference to output the log to the error file.

|

Output preference for error file log |

Value set in file |

|---|---|

|

Do not output |

0 |

|

Errors only |

1 |

|

All |

2 |

Error file name (ed_err_output_file)

This specifies the error file name. Be sure to specify the file name if you want to output the data processing log. Otherwise, no data processing log will be output, regardless of the output frequency for error file log value.

Use a maximum of 200 bytes of characters. You cannot use external characters.

Output the content of DataMagic error files to syslog (in UNIX) or event logs (in Windows) (ed_err_syslog_flag)

This specifies whether to output the error file contents to the event log

|

Output error files to the syslog |

Value set in file |

|---|---|

|

Do not output |

0 |

|

Output |

1 |

Error file generation method (ed_err_output_mode)

This specifies how to generate an error file when the file having the specified error file name is already present.

|

Error file generation method |

Value set in file |

|---|---|

|

Overwrite existing file |

R |

|

Append to existing file |

M |

Error file changeover size (MB) (ed_err_switch_size)

This specifies when to change over to a new error file. The value can be from 0 to 99. The unit is megabytes (MB). If 0 is specified, no error file changeover will be performed.

File changeover is performed when the error file reaches the specified size.

Log output format (ed_err_format)

This specifies the data processing log output format.

For details about the output format character string, see the description about the "format" parameter in the "utledlist (Command to output the conversion log)" section in DataMagic Reference Manual.

Path of environment settings shared by HULFT products (hulsharepath)

Set the path if you perform the exclusive control for the same file between the DataMagic or HULFT on other host. You need to specify the same directory path on all hosts running the HULFT family products where locking is to be performed.

If the path you set here does not match between the hosts while executing such as the input/output processing, the target file may be corrupted or the file in the middle of writing may be read because exclusive control is not applied correctly.

Specify the correct path.

Local file lock mode (localfile_lockmode)

In which DataMagic is installed on the file server that contains files shared as network files with other hosts, for the DataMagic instance installed on the file server, set Mode 1. In which DataMagic that do not shared files with other hosts, we recommend to set Mode 0.

This field is enabled when DataMagic starts.

|

Local file lock mode |

Value set in file |

|---|---|

|

Mode 0 (Do not use a network file lock on local files.) |

0 |

|

Mode 1 (Use a network file lock on local files.) |

1 |

Language (huledlang)

In DataMagic Server grade, this field specifies the language used for DataMagic Server operations (such as trace log output).

In DataMagic Desktop grade, this field specifies the language used when performing operations (such as trace log output) and displaying text on screens in the DataMagic Desktop.

In addition, when outputting to Excel files, the following fonts are used.

-

If JPN is selected: MS Pゴシック

-

If ENG is selected: Calibri

If you change the language during operation, multiple languages will be mixed in the trace log. If you select a different language from the one used before migration, multiple languages will be mixed in the trace log.

|

Language |

Value set in file |

|---|---|

|

Japanese |

JPN |

|

English |

ENG |

Date format (datefmt)

In the DataMagic Server grade, this field specifies the date format for DataMagic Server operations (such as trace log output).

In the DataMagic Desktop grade, this field specifies the date format for DataMagic Desktop operations (such as trace log output) and screen display.

If you omit the value, YMD (1) is used.

If you change the date format during operations, multiple date formats will be mixed in the trace log. If you select a different date format from the one used before migration, multiple date formats will be mixed in the trace log.

|

Date format |

Value set in file |

|---|---|

|

YMD (YYYY/MM/DD) |

1 |

|

MDY (MM/DD/YYYY) |

2 |

|

DMY (DD/MM/YYYY) |

3 |

Use multithreading in data transformation (ed_use_thread)

This field is used to specify whether to execute data processing in multithreaded processes. If you specify the setting to execute data processing in multithreaded processes, the processing completes faster because system resources are used more efficiently compared to single threaded process. Note that, however, such performance is not guaranteed, and depends on the environment to be used, the file formats, and the contents of files.

In step execution and test execution, data processing is always performed in a single-threaded process.

|

Execution in multithreaded processes |

Value set in file |

|---|---|

|

Do not use multithreading |

0 |

|

Use multithreading |

1 |

The priority is given in the order below when multithreaded processes are enabled. If settings with higher priorities are applied when performing data processing, settings with lower priorities are ignored.

-

Arguments for the utled command (-thread)

-

Options listed in the Data Processing Settings screen

-

Use multithreading field (ed_use_thread) in the system environment settings file (huledenv.conf)

Limit of record group division in sort/merge algorithm (ed_sort_merge_recordcount)

This is the number of divided record groups per thread to be used to perform a sort and merge. Change this value when you want to tuning. Records are processed in units equal to the specified value. We recommend specifying a large value when memory is sufficient, and a small value when the amount of memory available is small. When memory is insufficient, the data processing stops.

We recommend determining the value based on actual measurements.

The range of possible values is from 500000 (five hundred thousand) to 999999999 (nine hundred ninety-nine million, nine hundred ninety-nine thousand, nine hundred and ninety-nine). The initial value and the default value are both 5000000 (five million).

Encoding of input and output files (cs4file)

Specify the initial values of the combo box that is used to select the kanji code when data processing information is created.

- S:

-

SHIFT JIS

- J:

-

JEF

- E:

-

EUC

- I:

-

IBM Kanji

- K:

-

KEIS

- N:

-

NEC Kanji

- 6:

-

UTF-16

- 8:

-

UTF-8

- Z:

-

JIS

In the DataMagic Desktop grade, the specified kanji code is used for input and output. In the DataMagic Desktop grade, only "S: Shift JIS" and "8: UTF-8" can be specified.

Treat a line break as part of a field (ed_csv_linebreak)

Specify whether to treat a line break inside a field value that is enclosed by CSV enclosure characters as data.

The settings for ed_csv_linebreak are enabled when the input format is specified as CSV in the data processing information.

If you omit the settings, 1 (Yes) is selected.

|

Treat a line break as part of a field |

Value set in file |

|---|---|

|

Do not treat |

0 |

|

Treat |

1 |

Escape enclosure characters inside an enclosed text (ed_csv_enclosefields)

Specify whether to escape enclosure characters inside an enclosed field in CSV.

- When the input format is CSV

-

When an enclosure character inside a field is output, the character is doubled, as in "". However, as an exception, when a value of type I is output, the value is output as it is.

- When the output format is CSV

-

When an enclosure character inside a field is output, the character is doubled, as in "".

However, as an exception, when a value of type I is output, the value is output as it is.

The setting is not applied in any of the following cases:

-

The input or output format is specified as CSV and the Enclosure character field is specified as None in data processing information.

-

An enclosure character is inside a field of input data, and the character is not doubled.

The default value is 1 (Escape).

|

Escape enclosure characters inside an enclosed text |

Value set in file |

|---|---|

|

Do not escape |

0 |

|

Escape |

1 |

Treat a null character as part of a field (ed_fmt_null_flag)

Specify whether to allow a NULL character (0x00) to be part of a field value in the format.

We recommend replacing a NULL character with a space if possible. To replace a NULL character with a space, specify Replace in the Replacement of null characters with spaces field of the data processing information.

|

Treat a null character as part of a field |

Value set in file |

|---|---|

|

Do not treat |

0 |

|

Treat |

1 |

The settings in the Data Processing Settings screen override the settings in other information screens concerning whether to treat a NULL character as part of a field value. For example, when one of the following is specified in a data processing information option, even if 1 (Yes) is set for "Treat a null character as part of a field (ed_fmt_null_flag)" in the system environment settings file, the setting in the Data Processing Settings screen takes priority:

-

Null character present in fixed-format character data is selected as the stop condition.

-

For the input behavior, in Replacement of null characters with spaces, Replace is selected.

-

For merge processing, specify whether to treat the actual data, a merge key, or a duplicate priority key as a NULL character. The types of fields applicable for the keys are the same as for sort processing. Applicable data types are the type M and type X.

-

For matching processing, specify whether to treat the actual data and a matching condition as a NULL character. The matching condition is enabled when Character String is selected in the Type field, and Equals or Does not equal is used for the operator. Applicable data types are the type M and type X.

-

For a virtual table, the setting for whether to treat such data as a NULL character is not applicable. Because conversion to Kanji characters occurs inside the shift code of type N or type M, characters are likely to be garbled if the shift code includes a NULL character. For example, bytes might move and everything will be the default code.

.Use control characters inside a fixed value (ed_fixvalue_ctrlchar_flag)

Specify whether to treat the specified control character inside a fixed value as a character string or as a control character.

The values to be treated as control characters are line breaks (\n), returns (\r), horizontal tabs (\t),and hexadecimal expressions (\xHH). This option does not apply to any other characters.

Example: You select Set Mapping Information, Output Information Settings, Fixed Value, Fixed character string, and then specify \n (line break).

|

Specified Value |

Do not use |

Results |

|

|---|---|---|---|

|

Output data (hexadecimal number) |

Output preview |

||

|

A\nB |

Do not use |

A\nB (415C6E42) |

A\nB |

|

Use |

A B (410A42) |

A\nB |

|

|

Do not use |

Value set in file |

|---|---|

|

Do not use (*1) |

0 |

|

Use |

1 |

|

*1 |

: |

During data processing, a fixed value is converted from UTF-8 to the character encoding specified in the input settings, and then it is converted to the character encoding specified in the output settings. Therefore, even if control characters are inserted inside a string of the type X or type M, the output will be different. If you want to output control characters without any changes, use a character string of the type I. |

Allow field type differences during data comparison (compare_different_type_data)

Specify whether to allow comparisons between values of different types (such as character strings and numeric values), or whether to raise an error in an extraction condition.

If the field is set to 0, values of different types are not compared, and as a result records are not extracted even if they have the same key value as in an extraction condition. This behavior is obtained in the compatible mode of HULFT-DataMagic Ver.2.

If the field is set to 1, values of different types are compared, and as a result records are extracted if they have the same key value as in an extraction condition. For example, the character string "1234" is treated as the same value as the numeric value "1234".

If the comparison source is a string and the comparison target is a numeric value, the string value will be converted to a numeric value for comparison.

If the comparison source is a numeric value and the comparison target is a string, the numeric value will be converted to a string for comparison.

Note that the behavior is different from that in HULFT-DataMagic Ver.2.

|

Allow field type differences during data comparison |

Value set in file |

|---|---|

|

Do not allow |

0 |

|

Allow |

1 |

Treat a prefix and a dot within a table name as a schema qualifier (treat_dot_as_schema)

Specifies whether to treat, in the Simple of the Database Table - Detailed Information screen, a substring before a dot (.) together with the dot inside a value of the Table name field as the schema qualifier.

When the field is set to 0, even if a dot is inside a value for the Table name field, the entire value is treated as a table name.

When the field is set to 1, if the value of the specified Table name contains a period, the text up until the first period is treated as the schema name, and the text after the first period until the end of the string is treated as the table name.

If there is no dot, the entire Table name value is treated as the table name.

This setting is disabled in ODBC.

|

Handling of control a dot within a table name |

Value set in file |

|---|---|

|

Do not treat a dot as a schema qualifier |

0 |

|

Treat a dot as a schema qualifier |

1 |

Japanese era name (era_name)

This will allow you to specify as necessary a Japanese era name that will come into effect after the Heisei era. Up to five Japanese era names can be specified. When specifying more than one Japanese era name, specify the dates from left to right starting with the oldest date.

Specify dates in the following format.

era_name = YYYYMMDD,E,EE;YYYYMMDD,E,EE;...

|

Components |

Description |

|---|---|

|

YYYYMMDD |

Specify the start date of the era by using eight numeric value halfwidth characters. This value takes effect when all of the following conditions are met:

Note, the date format (datefmt) does not affect the value for YYYYMMDD. |

|

E |

The initial of the Japanese era name. Specify this by using one halfwidth uppercase letter. |

|

EE |

The character string of the Japanese era name. Specify this by using two multibyte characters. Specify this value in UTF-8. |

|

Comma (,) |

The delimiter that divides the values for YYYYMMDD, E, and EE. This cannot be omitted. |

|

Semicolon (;) |

The delimiter that divides each Japanese era when multiple Japanese era settings are specified. This can only be omitted at the end of a line. |

Example of settings for Japanese era

- Character encoding:

-

UTF-8

- Start date of the new Japanese era:

-

20190501

- Initial of the new Japanese era:

-

Z

- Character string of the new Japanese era:

-

世存

era_name = 20190501,Z,世存

Note: Specify "世存" in UTF-8.